Python ETL Example

Want to do ETL with Python? ETL (extract, transform, and load) is the process of fetching data from one or many systems and loading it into a target system. In this guide, we’ll explore how to design and implement ETL pipelines in Python for different types of datasets using Python, pandas, and SQLite. We’ll also look at how to work with Excel/CSV files in a Python environment to clean and transform raw data into a more ingestible form. Pandas is the de facto standard Python package for basic ETL jobs. More generally, a Python ETL framework is an environment for developing ETL software in the Python programming language.
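
A minimal sketch of the three steps follows. It assumes a small CSV input and uses only the standard library (`csv` and `sqlite3`) so it runs without extra dependencies. The CSV contents, the `sales` table, and its column names are hypothetical. With pandas, `pandas.read_csv` and `DataFrame.to_sql` would play the extract and load roles.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this would come from a file or an API.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
"""


def extract(text):
    """Extract: parse CSV text into a list of dicts (one per row)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalise names, cast types, drop rows missing an amount."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete records
        cleaned.append((
            int(row["order_id"]),
            row["customer"].strip().lower(),
            float(row["amount"]),
        ))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(text, conn):
    """Chain the three steps: extract -> transform -> load."""
    load(transform(extract(text)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_pipeline(RAW_CSV, conn)
    print(conn.execute("SELECT * FROM sales ORDER BY order_id").fetchall())
```

Keeping each step a separate function makes it easy to test them in isolation or to swap the SQLite target for another database later.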

What is an ETL data pipeline? It is a sequence of steps that extracts data from its sources, transforms it, and loads it into a destination. A Python ETL script typically begins by importing the modules and functions it needs. When the transactional information is stored online, the extract step must first download it. Packt’s book Building ETL Pipelines with Python has an accompanying code repository.
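
Downloading online transactional data as the extract step might look like the sketch below. It assumes the data is published as a UTF-8 CSV file at a URL; the URL and the `download_csv` helper are hypothetical. It uses `urllib.request` from the standard library rather than any particular vendor client.

```python
import csv
import io
import urllib.request


def download_csv(url, timeout=30, encoding="utf-8"):
    """Extract step: download a CSV resource and return its rows as dicts.

    `url` is wherever the (hypothetical) transactions export lives;
    urllib also accepts file:// URLs, which is handy for local testing.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        # Assumes the payload is text in `encoding`; adjust to match the source.
        text = response.read().decode(encoding)
    return list(csv.DictReader(io.StringIO(text)))


# Usage (hypothetical URL):
# rows = download_csv("https://example.com/transactions.csv")
```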

There are various tools available that make building ETL pipelines in Python easier. For recurring jobs, an incremental load identifies and processes only new and modified rows.
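
One way to identify new and modified rows is to keep a fingerprint (hash) of each loaded row and compare it against the source on the next run. The sketch below assumes each row is a dict with a unique `id` key. The `loaded_fingerprints` lookup is a hypothetical bookkeeping structure you would persist alongside the target; it is not a feature of any specific tool.

```python
import hashlib


def row_fingerprint(row, key="id"):
    """Hash every non-key column so a changed row gets a different digest."""
    payload = "\x1f".join(f"{col}={row[col]}" for col in sorted(row) if col != key)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def classify_changes(source_rows, loaded_fingerprints, key="id"):
    """Split source rows into (new, modified); unchanged rows are dropped.

    `loaded_fingerprints` maps each already-loaded key to the fingerprint
    recorded when that row was last loaded (hypothetical bookkeeping).
    """
    new, modified = [], []
    for row in source_rows:
        stored = loaded_fingerprints.get(row[key])
        if stored is None:
            new.append(row)
        elif stored != row_fingerprint(row, key):
            modified.append(row)
    return new, modified
```

Hashing avoids comparing every column against the target one by one, at the cost of storing one digest per loaded row.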

ETL is the general procedure of copying data from one or more sources into a destination system that represents the data differently from the source(s) or in a different context than the source(s). It involves retrieving data from various sources, modifying it, and loading it into that destination. Some Python ETL frameworks provide tools for building data transformation pipelines using plain Python primitives. Prefect, for example, lets you declare tasks, flows, parameters, and schedules, and handle failures; it can also be run in Saturn Cloud.
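
Prefect configures failure handling per task (for example through a `retries` option on its `@task` decorator). The sketch below does not use Prefect. It shows the underlying idea in plain Python with a hypothetical `retry` decorator that re-runs a flaky step a fixed number of times before letting the error propagate.

```python
import functools
import time


def retry(attempts=3, delay_seconds=1.0, exceptions=(Exception,)):
    """Hypothetical decorator: re-run a step up to `attempts` times."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, attempts + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == attempts:
                        raise  # out of attempts: surface the last error
                    time.sleep(delay_seconds)  # back off before retrying
        return wrapper
    return decorator


@retry(attempts=3, delay_seconds=0.5, exceptions=(ConnectionError,))
def extract_orders():
    """Hypothetical extract step that may hit transient network errors."""
    return []
```

Restricting `exceptions` to transient errors (such as `ConnectionError`) keeps genuine data bugs from being retried pointlessly.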

Popular tools include Apache Airflow and Luigi for workflow management.
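
Workflow managers such as Airflow and Luigi run tasks in dependency order. The sketch below uses neither tool. It illustrates the core idea with the standard library’s `graphlib.TopologicalSorter` (Python 3.9+), and the task names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_in_order(tasks, dependencies):
    """Run each task only after all of its upstream tasks have finished.

    `tasks` maps a task name to a zero-argument callable; `dependencies` maps
    a task name to the set of names it depends on. Raises graphlib.CycleError
    if the dependencies contain a cycle.
    """
    graph = {name: dependencies.get(name, set()) for name in tasks}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        tasks[name]()
    return order


# Hypothetical three-step pipeline, declared out of order on purpose.
log = []
pipeline = {
    "load": lambda: log.append("load"),
    "extract": lambda: log.append("extract"),
    "transform": lambda: log.append("transform"),
}
print(run_in_order(pipeline, {"transform": {"extract"}, "load": {"transform"}}))
```

Real workflow managers add scheduling, retries, parallelism, and monitoring on top of this ordering step.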

Here Are 8 Great Libraries.

The incremental data load approach is often the preferred ETL (extract, transform, and load) design pattern for recurring jobs: each run processes only the rows that are new or modified since the previous run, instead of reloading the full dataset.
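
A common way to implement this is a stored "watermark" (the latest change timestamp already loaded) plus an upsert. The minimal sketch below targets SQLite and assumes each source row carries an `updated_at` ISO-8601 string, so string comparison matches time order. The table names, columns, and `etl_state` bookkeeping table are hypothetical. The `ON CONFLICT ... DO UPDATE` upsert needs SQLite 3.24 or newer.

```python
import sqlite3


def incremental_load(source_rows, conn):
    """Load only rows changed since the last run; return how many were loaded."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
    )
    conn.execute(
        "CREATE TABLE IF NOT EXISTS etl_state (key TEXT PRIMARY KEY, value TEXT)"
    )
    found = conn.execute(
        "SELECT value FROM etl_state WHERE key = 'customers_watermark'"
    ).fetchone()
    watermark = found[0] if found else ""  # empty string: first run loads everything

    changed = [r for r in source_rows if r["updated_at"] > watermark]
    # Upsert: insert rows with new ids, update rows whose id already exists.
    conn.executemany(
        "INSERT INTO customers (id, name, updated_at) "
        "VALUES (:id, :name, :updated_at) "
        "ON CONFLICT(id) DO UPDATE SET "
        "name = excluded.name, updated_at = excluded.updated_at",
        changed,
    )
    if changed:
        # Advance the watermark to the newest change just loaded.
        conn.execute(
            "INSERT INTO etl_state (key, value) VALUES ('customers_watermark', ?) "
            "ON CONFLICT(key) DO UPDATE SET value = excluded.value",
            (max(r["updated_at"] for r in changed),),
        )
    conn.commit()
    return len(changed)
```

Because the comparison is strictly greater-than, a row that arrives late with a timestamp equal to or older than the watermark is skipped; sources with late-arriving data may need an overlap window or the fingerprint comparison instead.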

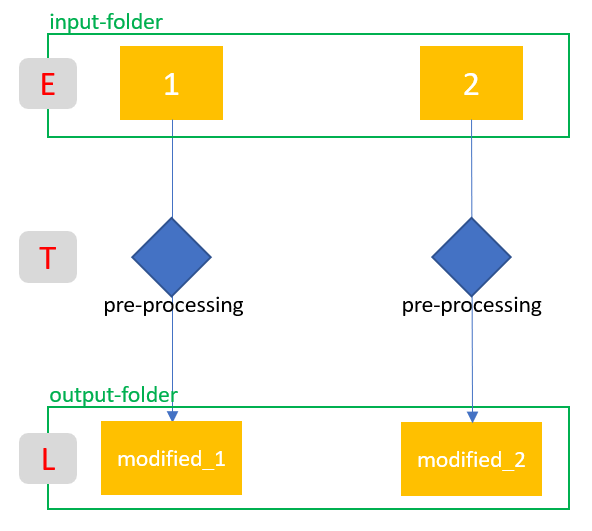

ETL Stands For “Extract”, “Transform”, “Load”.

An ETL (data extraction, transformation, loading) pipeline runs these three stages in sequence. In my last post, I discussed how we could set up a script to connect to the Twitter API and stream data directly into a database.
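
The Twitter API client from that post is not reproduced here. As a stand-in, the sketch below consumes any iterable of `(id, text)` records (a hypothetical stream) and writes it to SQLite in small batches, so memory use stays bounded even if the stream is long-running. The `messages` table is hypothetical.

```python
import itertools
import sqlite3


def stream_to_db(records, conn, batch_size=100):
    """Write an iterable of (id, text) tuples in batches.

    Returns the number of records processed. Duplicate ids are skipped
    by INSERT OR IGNORE, so they count as processed but are not inserted.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS messages (id INTEGER PRIMARY KEY, text TEXT)"
    )
    iterator = iter(records)
    total = 0
    while True:
        batch = list(itertools.islice(iterator, batch_size))
        if not batch:
            break  # the stream is exhausted
        conn.executemany("INSERT OR IGNORE INTO messages VALUES (?, ?)", batch)
        conn.commit()  # commit per batch so earlier batches survive a later failure
        total += len(batch)
    return total
```

Larger batches mean fewer commits and faster loads; smaller batches lose less work if the process stops mid-stream.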

Python Tools And Frameworks For ETL.

Some Popular Tools Include Apache Airflow And Luigi For Workflow Management.

ETL is the process of extracting large volumes of data from a variety of sources and formats and converting it to a single format before loading it. Apache’s Airflow project is a popular tool for scheduling Python jobs and pipelines, which can be used for “ETL jobs” (i.e., to extract, transform, and load data).
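
Converting several source formats into a single format can be sketched with the standard library. The sources below are hypothetical: a CSV export with `user_id`/`total` columns and a JSON feed with `uid`/`value` fields. Each is mapped onto one common record shape before loading.

```python
import csv
import io
import json


def from_csv(text):
    """CSV source: columns `user_id` and `total` (hypothetical)."""
    return [
        {"id": int(r["user_id"]), "amount": float(r["total"])}
        for r in csv.DictReader(io.StringIO(text))
    ]


def from_json(text):
    """JSON source: a list of objects with `uid` and `value` (hypothetical)."""
    return [
        {"id": int(o["uid"]), "amount": float(o["value"])}
        for o in json.loads(text)
    ]


def unify(*batches):
    """Concatenate already-normalised batches into one list ordered by id."""
    return sorted((rec for batch in batches for rec in batch), key=lambda r: r["id"])


records = unify(
    from_csv("user_id,total\n2,3.5\n"),
    from_json('[{"uid": "1", "value": 10}]'),
)
```

Giving each source its own small adapter keeps format-specific quirks out of the shared transform and load code.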